This isn’t meant to be a comprehensive article about risk management (that’s a topic unto itself) nor is it about specific frameworks or go-to-market strategies. Rather, I’m highlighting something that some seem to be overlooking, and hope others are carefully implementing in their AI initiatives.

I have found myself daydreaming about deploying my own AI-powered application one of these days (and I have come close). The more I talk to my engineering friends and colleagues, though, the more I realize that the “vibe coding” experience, as thrilling and exhilarating as it is, cannot be taken lightly.

Do I think the democratization of software development is bad and we should avoid vibe coding? No, absolutely not. I just think some people aren’t realizing the implications of their actions. Seeing something online that you’ve deployed and co-created with AI is an incredible feeling, especially if you’ve never done it before or have always dreamed about it but couldn’t until now.

Maybe it’s just my lawyer “hat”, but I felt it was worth sharing.

This doesn’t mean you can’t build responsibly with AI while following good engineering practices. Rather, it means taking a human-centric approach grounded in sound, proven engineering best practices instead of focusing solely on operational efficiency.

While vibe coding isn’t inherently bad, we must acknowledge that some of our current lack of best practices could have long-term detrimental effects.

In my own journey, I’ve often started development only to discover I’m missing crucial engineering knowledge needed to build responsibly and viably, even though what I was building seemed to resemble what I usually see in well-developed projects.

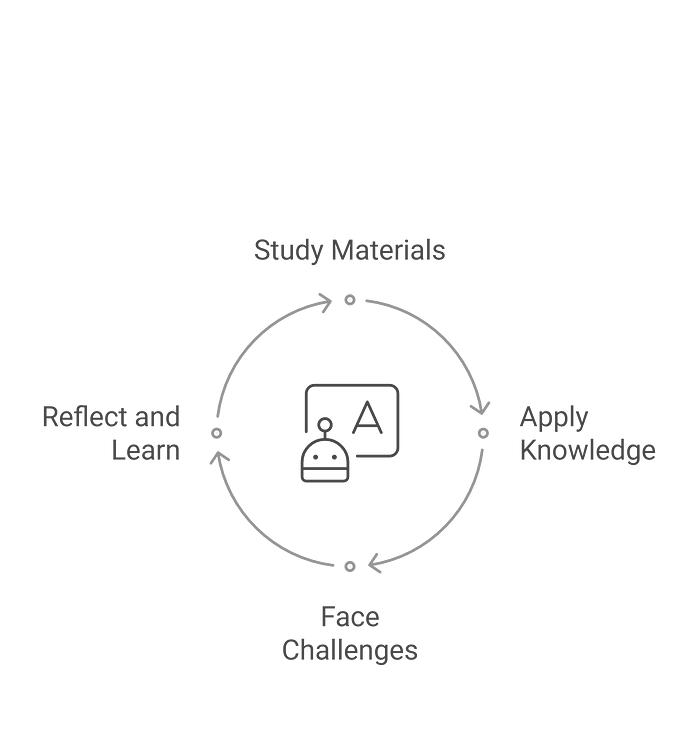

While seeing AI-generated code materialize a vision on screen is exciting (offering a glimpse into the future) my constant attempt to deepen my knowledge has repeatedly forced me to pause, reassess, and return to learning. This includes studying topics like programming 101, pseudo-code to instruct the LLM in a better way, manual QA, automation frameworks, effective prompt crafting, DevOps, CI/CD workflows, Git, product management, and even proper configuration of tools like Cursor or Windsurf.

It often feels like I’m taking three steps forward and ten back as I revisit study materials to grasp frameworks, processes, and high-level architectural concepts needed for production-ready applications. This journey has humbled me, revealing that while I can direct an AI language model to perform tasks, doing so properly is an entirely different challenge. At the same time, I am so happy to have gone through this, as it has allowed me to communicate at a much deeper level with my technical peers, clients, etc. It does make a difference!

As I was reading and watching some articles and posts on LinkedIn the other day, it occurred to me that we sometimes forget that companies could be deploying applications without basic risk management or AI governance: the basic principles, policies, and procedures needed for a well-structured, long-term business idea.

Again, this is just my view and opinion as a lawyer, a business person, and someone immersed hands-on in AI (not just vibe coding 🙂).

Framing the idea

Imagine you’re planning to create an application that generates bedtime stories for kids using AI-powered features and language models.

At first glance, the application seems harmless — just a well-intentioned idea perfect for a fun coding project. Soon you realize its potential: charging $5, $10, or $15 monthly subscriptions, or offering pay-per-generation credits could turn this into a profitable business.

Let’s say you vibe coded and deployed the application, and it’s now serving customers and generating revenue.

You’re thrilled: your dream has become reality (right?).

Then suddenly, a customer complaint arrives: a generated story terrified their four-year-old child so severely that the child needs psychological help, and now you’re facing potential legal action. How could this have happened when the app was never meant to cause any harm?

Did you assess the risks of your application or idea? “Nah, that’s boring”… until it’s not.

Probability/Severity Harms Matrix.

We’re increasingly seeing companies where just a handful of people armed with AI tools have deployed applications generating millions in annual revenue.

But there’s more to these success stories than meets the eye. Behind the scenes, there’s often either a non-technical founder who partnered with an experienced engineer, an “investor” firm/person that recognized a promising business idea and ensured its technical viability, or an amazing technical individual who knew how to put the idea together and successfully make it happen.

We really don’t know until we dig deep into the company and their trajectory. But this emphasizes the idea that while vibe coding can get us somewhere, there are certain things that we could be overlooking.

In these successful companies, there was likely (and hopefully) some form of risk assessment in place.

This matrix has long been part of risk management processes. I’m simply applying the concept to our current AI vibe coding landscape, because it is sometimes easy to read about a principle without understanding how to apply it.

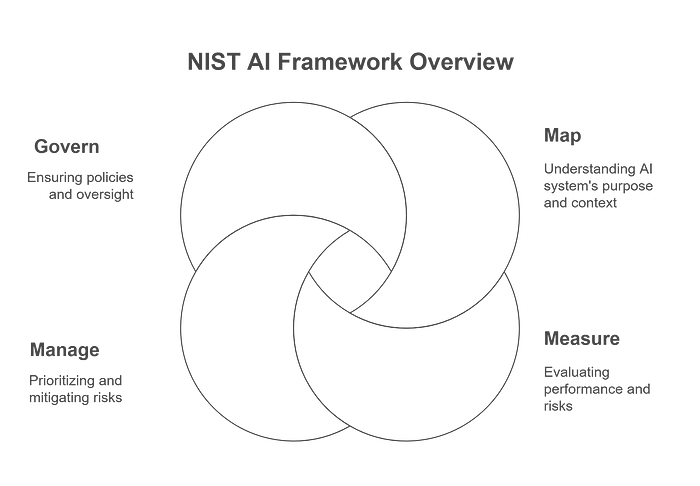

One more time, this is not a blog about risk management. The matrix is just one tool within the broader scope of risk management that everybody should be thinking about, whether you’re vibe coding or not, especially in the era of probabilistic models that could have an adverse effect on the functionality of your system. To learn more, read about the different risk management frameworks and methodologies, such as the NIST AI Risk Management Framework, ISO standards, IEEE, etc.

But What Is It?

Think of this matrix as a visual tool that assesses both the likelihood (probability) and impact (severity) of potential risks materializing in your AI application. While you can use either quantitative or qualitative scoring systems, what truly distinguishes an effective matrix from an ineffective one is context. The matrix must be customized to your specific organization, application, and business environment to be useful. A one-size-fits-all approach simply won’t work.

The matrix shown in this article combines both qualitative and quantitative measures. However, you should customize it to fit your organization and specific AI project needs. You could use only qualitative or only quantitative measures, a weighted score, or something like the one shown here: whatever makes sense for your project. The AI context is crucial when creating the matrix, as it enables you and your team to properly assess the risks associated with your application.

Understanding the Matrix Axes

Let’s break down the two key dimensions of our risk assessment matrix:

Probability (X-axis): How likely is it to happen?

Think of this as a scale from “almost never” to “almost certain”

We use five levels: Almost Certain → Likely → Possible → Unlikely → Rare

We determine this using past data, expert insights, and careful analysis

In the AI context, determining the probability goes beyond general estimation. It requires careful consideration of several factors:

- Quality and representativeness of training data: how accurate, complete, and consistent the data used to train the AI model is, and how well it reflects the real-world environment where the AI will operate. If you are testing in dev and see that the model does not output consistent characters or stories despite a good algorithm or prompt, think twice about using it.

- Complexity and opacity of model architecture: complexity refers to how intricate the internal workings of the AI model/application are (e.g., a deep neural network with many layers), while opacity refers to the difficulty of understanding how the model arrives at its decisions (i.e., a black box).

- Vulnerability to adversarial attacks: this is self-explanatory, but think about how susceptible the AI model/application is to being intentionally tricked or manipulated by specially crafted inputs designed to cause malfunctions or extract sensitive information.

- Novelty of the AI application itself: how new or unprecedented the AI application, its intended use case, or the environment it’s deployed in is.
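
One way to keep these four factors from staying abstract is to rate each of them and let the ratings point to a probability level. The sketch below is only an illustration under stated assumptions: the 1 to 5 ratings, the field names, and the "average, then round up" rule are all hypothetical choices of mine, not part of any standard framework. Your own team may weigh the factors very differently.

```python
import math
from dataclasses import dataclass
from statistics import mean

# Probability levels as named in the article, ordered from least to most likely.
PROBABILITY_LEVELS = ["Rare", "Unlikely", "Possible", "Likely", "Almost Certain"]


@dataclass
class AIContextFactors:
    """Hypothetical ratings from 1 (low concern) to 5 (high concern)."""
    data_quality_concern: int   # training data quality and representativeness
    model_opacity: int          # complexity and opacity of the architecture
    adversarial_exposure: int   # vulnerability to adversarial attacks
    novelty: int                # how new the application or use case is

    def ratings(self) -> list[int]:
        return [
            self.data_quality_concern,
            self.model_opacity,
            self.adversarial_exposure,
            self.novelty,
        ]


def estimate_probability(factors: AIContextFactors) -> str:
    """Turn the four ratings into one of the five probability levels.

    Assumption: a plain average, rounded UP so that borderline cases
    land on the more cautious (more likely) level.
    """
    ratings = factors.ratings()
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("each rating must be between 1 and 5")
    index = math.ceil(mean(ratings)) - 1
    return PROBABILITY_LEVELS[index]


if __name__ == "__main__":
    # Hypothetical ratings for a bedtime-story app built on a third-party model.
    story_app = AIContextFactors(
        data_quality_concern=4,
        model_opacity=3,
        adversarial_exposure=2,
        novelty=4,
    )
    print(estimate_probability(story_app))
```

Rounding up is a deliberately conservative choice: when in doubt, the sketch assumes the risk is more likely rather than less. Past data and expert insight should still override whatever a simple average suggests.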

Severity (Y-axis): How bad would it be if it happened?

Think in ranges from “barely noticeable” to “catastrophic” or something that makes sense

Five levels: Negligible → Minor → Moderate → Major → Catastrophic

You should look at everything from financial losses to user safety and reputation damage

The severity assessment, should the risk materialize, must be a well-thought-out, comprehensive process that cannot be taken lightly either. Some of the things to consider if the event happens are:

- Financial losses and operational disruptions

- Reputational damage

- Regulatory penalties

- Threats to safety (physical injury or death)

- Infringements on civil liberties and rights

- Privacy violations

- Environmental damage

- Psychological harm to individuals (see example below)
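
With both axes defined, the matrix itself can be sketched in a few lines. This is a minimal sketch, assuming each level maps to a score from 1 to 5 and that a risk score is simply probability times severity. The numeric scores and the Low/Medium/High cut-offs are my own hypothetical choices; adjust them to your organization and project.

```python
# Levels as named in the article, mapped to hypothetical 1-5 scores.
PROBABILITY = {"Rare": 1, "Unlikely": 2, "Possible": 3, "Likely": 4, "Almost Certain": 5}
SEVERITY = {"Negligible": 1, "Minor": 2, "Moderate": 3, "Major": 4, "Catastrophic": 5}


def risk_score(probability: str, severity: str) -> int:
    """Multiply the two axis scores (a common convention, not the only one)."""
    return PROBABILITY[probability] * SEVERITY[severity]


def risk_band(score: int) -> str:
    """Map a score to a band. The cut-offs are assumptions; tune them to your context."""
    if score >= 15:
        return "High"
    if score >= 6:
        return "Medium"
    return "Low"


def build_matrix() -> dict[tuple[str, str], str]:
    """Return the band for every (probability, severity) cell of the 5x5 grid."""
    return {
        (p, s): risk_band(risk_score(p, s))
        for p in PROBABILITY
        for s in SEVERITY
    }


if __name__ == "__main__":
    # Hypothetical bedtime-story risk: a frightening story is judged
    # "Possible" and its impact on a young child "Major".
    score = risk_score("Possible", "Major")
    print(score, risk_band(score))  # prints: 12 Medium
```

One caveat with a plain product: a "Rare" but "Catastrophic" risk scores only 5 and lands in the Low band here. If that feels wrong for your application (and for anything involving children it probably should), consider a rule that escalates any Catastrophic severity regardless of probability.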

Fictional & Practical Example

Dream Weaver AI: Multi-Dimensional Severity Framework

Let’s go back to the initial idea we had of creating an app for bedtime stories.

Picture this: The Dream Weaver AI team gathers around a virtual whiteboard, faced with a challenging scenario.

A story their AI created has promoted harmful stereotypes, and they know they can’t just slap a simple “high risk” label on it and move on. Instead, they dive deep, examining this potential harm through multiple lenses.

For each dimension, Dream Weaver might use a scale like Negligible, Minor, Moderate, Major, Catastrophic, as we saw above.

So what could be so bad about an AI generating the wrong type of image/story, you ask?

When considering harm to people, particularly in the context of the app and target population (i.e. children aged 3–7 years old and their parents/guardians), psychological harm to children presents a spectrum of concerns.

At the lowest level, a story might be slightly confusing or uninteresting, causing no lasting impact. Minor issues could include momentary boredom or mild confusion. All “good” here, but think about the reputational harm, lack of interest, refunds, subscription drops, etc. Not good either.

More moderate concerns arise when content generates temporary fear or anxiety, such as unresolved perilous situations or overly complex concepts that might require brief parental comfort. Now you should start to sweat a bit if a claim comes your way or bad publicity takes off, not to mention what a poor marketplace rating (if it’s a mobile app) can do to your listing.

More serious issues emerge when stories are clearly age-inappropriate, potentially causing nightmares, prolonged distress, or negative self-perceptions that lead to parental concern and app avoidance. Definitely, brace for impact.

At the most severe level, stories could generate deeply traumatizing content with violent imagery, existential themes, or inappropriate adult content that requires professional intervention. Better call Saul (i.e. lawyer)!

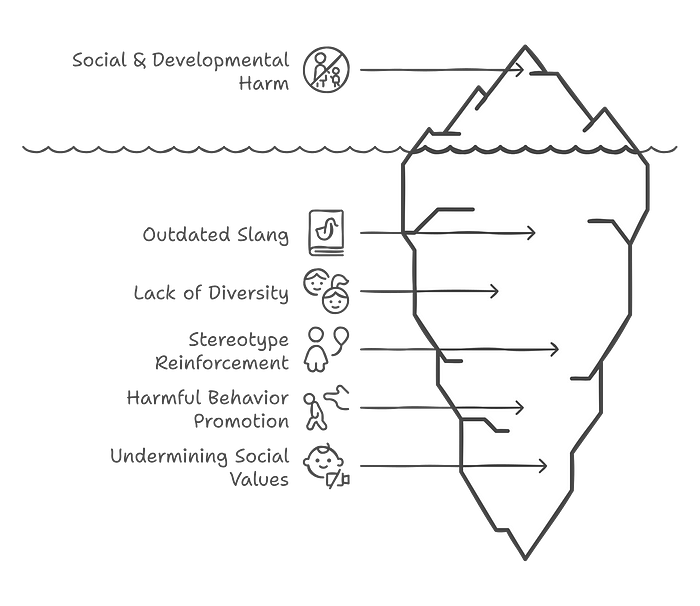

Let’s consider another dimension: Social & Developmental Harm to Children.

At the negligible level, stories might simply use outdated slang, while minor issues emerge when content lacks diversity in characters or settings. More concerning moderate cases involve consistent reinforcement of gender stereotypes and cultural biases that could shape a child’s understanding of social roles. Major harm occurs when stories actively promote harmful stereotypes or unsafe behaviors, leading children to mimic these problematic patterns. At the catastrophic level, content systematically undermines positive social values, promoting discrimination and distorting children’s understanding of healthy relationships and consent in fundamentally damaging ways.

When considering harm to the enterprise or organization (in this case, Dream Weaver AI Company), reputational damage can range significantly. At the negligible level, the company might face a few isolated negative comments or reviews about story repetitiveness. Minor issues could include a small number of public complaints on app stores about boring content or glitches. As concerns escalate to moderate, the company might encounter negative feedback on social media or parent forums about mildly inappropriate or scary content, along with minor negative press coverage. Major reputational damage would involve widespread negative media coverage, viral social media campaigns by concerned parents, plummeting app store ratings, and calls for boycotts. At the catastrophic level, Dream Weaver AI could become a cautionary tale of “AI gone wrong for kids,” leading to irreparable brand damage, widespread condemnation, and complete loss of stakeholder trust.
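
The multi-dimensional view above can be captured as one severity rating per dimension. The sketch below is a hypothetical illustration: the dimension names, the example ratings, and the rule of taking the worst dimension as the overall severity are my assumptions. The article does not prescribe how to combine dimensions, and a weighted approach could be just as valid.

```python
from enum import IntEnum


class Severity(IntEnum):
    """The five severity levels from the article, ordered by impact."""
    NEGLIGIBLE = 1
    MINOR = 2
    MODERATE = 3
    MAJOR = 4
    CATASTROPHIC = 5


def overall_severity(ratings: dict[str, Severity]) -> Severity:
    """Assumption: the overall severity is the worst rating across dimensions."""
    if not ratings:
        raise ValueError("at least one dimension must be rated")
    return max(ratings.values())


def worst_dimensions(ratings: dict[str, Severity]) -> list[str]:
    """Name the dimension(s) driving the overall severity."""
    worst = overall_severity(ratings)
    return [name for name, level in ratings.items() if level == worst]


if __name__ == "__main__":
    # Hypothetical ratings for the scenario where a generated story
    # actively promotes harmful stereotypes.
    stereotype_story = {
        "psychological_harm_to_children": Severity.MINOR,
        "social_developmental_harm": Severity.MAJOR,
        "reputational_damage": Severity.MODERATE,
    }
    print(overall_severity(stereotype_story).name)  # prints: MAJOR
    print(worst_dimensions(stereotype_story))       # prints: ['social_developmental_harm']
```

Taking the maximum means one badly rated dimension cannot be averaged away by several mild ones, which seems like a reasonable default when the affected users are young children.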

This is a simple but hopefully comprehensive way of seeing and using the matrix, demonstrating how it can be effectively applied to evaluate potential risks. While the example shown here focuses on specific use cases, the fundamental principles of probability and severity assessment can be adapted to analyze risks across various AI applications and scenarios.

I don’t mean to suggest that vibe coding isn’t valuable — it has solved many problems and reduced many pain points. However, we should be thoughtful about relying on vibe coding without considering the consequences, best engineering practices, and proper risk management.

The goal is to harness AI advancements in a way that is useful and respectful.

When tackling your next vibe coding project, take a step back and consider all possibilities. Use tools like this matrix to evaluate the risks of working with third-party models, fine-tuned models, or even models you’ve created yourself. This is especially important for models you’ve developed in-house.

Cheers!

Disclaimer:

The views and opinions expressed in this blog are solely my own and are intended for educational and informational purposes only. They do not constitute legal, financial, or business advice. Readers are encouraged to seek independent professional advice before applying any ideas or suggestions discussed herein. Any reliance you place on the information provided is strictly at your own risk.